NVIDIA has expanded its CUDA platform to support RISC-V processors, a move that could reshape the AI hardware industry. The milestone challenges the long-standing dominance of x86 and ARM, traditionally the go-to architectures for AI and data-center CPUs: Intel and AMD rule the x86 market, while NVIDIA and the Big Tech companies rely on custom ARM designs.

But NVIDIA's support for RISC-V opens a new chapter.

CUDA, the dominant software platform for AI computing, is now available on RISC-V, giving this open instruction set architecture a solid foundation for entering the AI space. Because RISC-V itself is an open standard, chip designers pay no licensing fees to use it, which removes a cost that has historically kept smaller companies and startups out of AI hardware. Combined with CUDA support, this makes RISC-V an appealing choice for developers, who can use and modify the instruction set without worrying about royalties.

Another advantage of RISC-V is its simplicity and modularity. Its small base instruction set, which designers can extend with optional extensions, simplifies chip design and verification and speeds up development. As AI continues to evolve, RISC-V's low cost and efficiency make it well suited to edge computing, where the large-scale ARM and x86 clusters typical of data centers are not feasible.

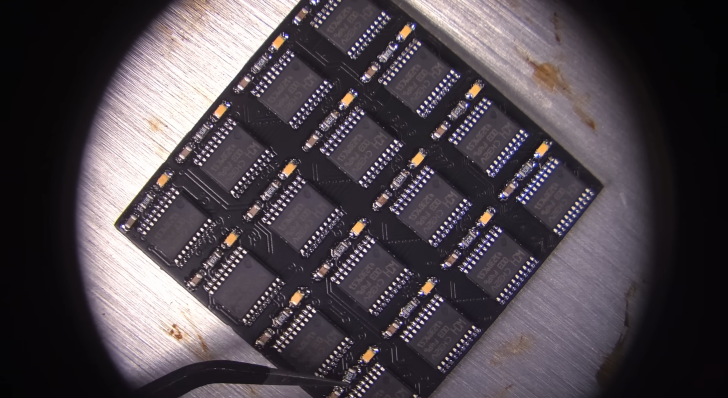

Tenstorrent, the AI chip company led by the renowned chip architect Jim Keller, already uses RISC-V in its AI processors, offering a more affordable alternative to expensive proprietary systems. Its Wormhole-based accelerator cards, such as the n150 and n300, illustrate how cost-effective RISC-V-based designs can be. RISC-V has also become particularly popular among Chinese developers, who are drawn to its open nature. With CUDA support, interest in RISC-V is likely to grow further.